SIAT-PCQD: Subjective Point Cloud Quality Database With 6DoF Head-Mounted Display

Xinju Wu, Yun Zhang, Chunling Fan, Junhui Hou, Sam Kwong

Introduction

We focus on subjective and objective Point Cloud Quality Assessment (PCQA) in an immersive environment and study how the geometry and texture attributes contribute to compression distortion. Using a Head-Mounted Display (HMD) with six degrees of freedom, we establish a subjective PCQA database named SIAT Point Cloud Quality Database (SIAT-PCQD). Our database consists of 340 distorted point clouds, obtained by compressing 20 sequences with the MPEG point cloud encoder under 17 pairs of geometry and texture quantization parameters (20 × 17 = 340).

Keywords: Point clouds, subjective quality assessment, quality metrics, virtual reality, six degrees of freedom (6DoF).

Publications

[1] Xinju Wu, Yun Zhang, Chunling Fan, Junhui Hou, and Sam Kwong, Subjective Quality Database and Objective Study of Compressed Point Clouds with 6DoF Head-mounted Display, IEEE Transactions on Circuits and Systems for Video Technology, 2021. (arXiv, Database@ IEEE Dataport)

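[2] Xinju Wu, Yun Zhang, Chunling Fan, Linwei Zhu, Na Li, and Jinyong Pi, Subjective Point Cloud Quality Assessment Database for Human Visual Tasks (in Chinese), AVS-M6065, 2020. (Document)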

Subjective Experiment Settings

The workflow carried out before the experiment mainly comprises preprocessing, encoding, and rendering.

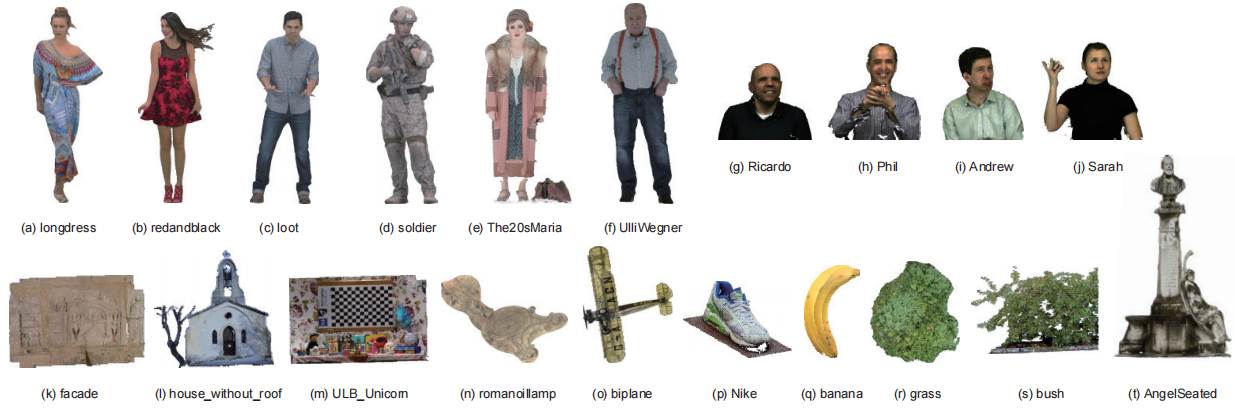

- Preprocessing: The sequences are selected from different repositories, so their sizes, positions, and orientations vary. However, we want the point clouds to be rendered life-size to achieve realistic tele-immersive scenarios. In the preprocessing stage, we therefore normalize the sequences so that the point clouds fit within a similar bounding box (600, 1000, 400). The source models were processed with sub-sampling, rotation, translation, and scaling, except for the four sequences Longdress, Redandblack, Loot, and Soldier from the 8i Voxelized Full Bodies Database. Additionally, the point cloud encoder V-PCC cannot handle non-integer coordinates, so point positions were rounded to integers and the resulting duplicate points were removed. Integer conversion was unnecessary for the four upper-body figure sequences from the Microsoft database, so we only adjusted their positions and orientations in the rendering software.

- Encoding: Distorted versions were generated using the state-of-the-art MPEG PCC reference software Test Model Category 2 version 7.0 (TMC2v7.0). The V-PCC method projects point clouds into 2D frames and then takes advantage of an advanced 2D video codec. First, a point cloud is split into patches by clustering normal vectors. The obtained patches are packed into images, and the gaps between patches are padded to reduce pixel residuals. The projected images are then compressed with the HEVC reference software HM16.18. The quantization parameter (QP) determines the quantization step size for the transform coefficients in the codec. In V-PCC, a pair of parameters, namely geometry QP and texture QP, regulates how much detail is preserved in the geometry and texture attributes of point clouds. As geometry QP increases, points deviate further from their original positions. As texture QP increases, color details are progressively merged and lost. Similar to the Common Test Conditions (CTC) document from the MPEG PCC, geometry QP ranges from 20 to 32 in steps of 4, and texture QP ranges from 27 to 42 in steps of 5. In Table I, each pair lists geometry QP first and texture QP second. We chose a losslessly compressed version as the reference content.

- Rendering: Point clouds are well suited to representing the complete view of objects and scenes in immersive applications with 6DoF. Thus, we developed interactive VR experiment software that lets subjects observe point cloud models in the 6DoF environment.
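The preprocessing step described in the list above (fitting a sequence into the (600, 1000, 400) bounding box, rounding positions to integers, and removing duplicate points) can be illustrated with a minimal NumPy sketch. This is not the authors' tool: the function names `fit_to_bounding_box` and `round_and_deduplicate` are hypothetical, the uniform scaling and the translation to the origin are assumptions, and keeping the color of the first point that lands on each integer position is likewise an assumption, since the text does not say how colors of merged points were handled.

```python
import numpy as np


def fit_to_bounding_box(points, target=(600, 1000, 400)):
    """Shift the cloud to the origin and scale it uniformly to fit inside `target`.

    Uniform scaling (one factor for all axes) is an assumption made here to
    preserve the aspect ratio of the model.
    """
    points = np.asarray(points, dtype=np.float64)
    mins = points.min(axis=0)
    extent = points.max(axis=0) - mins
    nonzero = extent > 0
    if not np.any(nonzero):
        return points - mins  # a single position: nothing to scale
    # The most restrictive axis decides the scale factor.
    scale = (np.asarray(target, dtype=np.float64)[nonzero] / extent[nonzero]).min()
    return (points - mins) * scale


def round_and_deduplicate(points, colors):
    """Round positions to integers and drop points that collapse onto the same position.

    The color of the first point at each integer position is kept (assumption).
    """
    voxels = np.rint(points).astype(np.int64)
    # return_index gives the index of the first occurrence of each unique row.
    _, first = np.unique(voxels, axis=0, return_index=True)
    keep = np.sort(first)  # preserve the original point order
    return voxels[keep], np.asarray(colors)[keep]
```

A typical call would chain the two functions, for example `xyz, rgb = round_and_deduplicate(fit_to_bounding_box(raw_xyz), raw_rgb)`, where `raw_xyz` and `raw_rgb` are placeholder arrays of shape (N, 3). Sub-sampling and rotation, which the text also mentions, are left out of the sketch.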

SUMMARY OF PRE-PROCESSED TEST SEQUENCES.