Video-based Crowd Counting Dataset in Compression Scenario Download

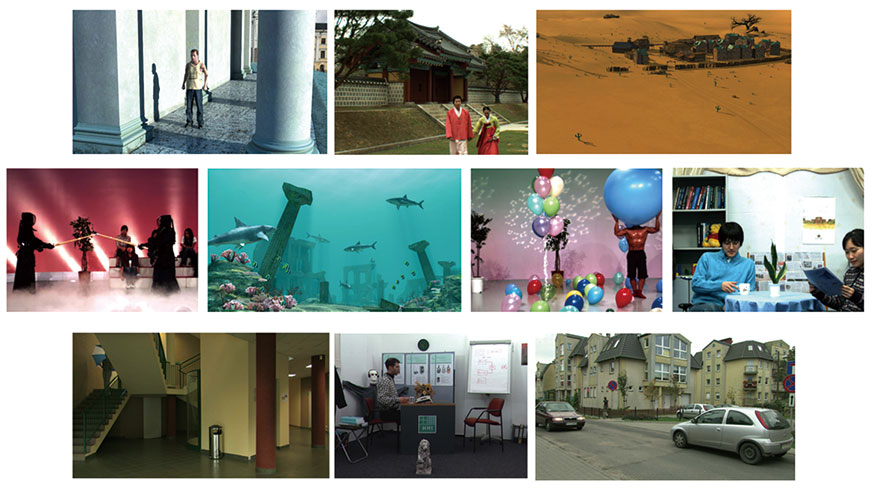

We built a Video-based Crowd Counting Dataset in Compression Scenario (VCCD-CS) for evaluating video crowd counting methods on crowd videos with different levels of compression distortion, measured by the quantization parameter (QP). The testing set of the Fudan-ShanghaiTech dataset (FDST) is used as the source of reference videos. FDST is a video crowd counting dataset containing 15,000 frames with about 394K annotated heads captured from 13 different scenes. Its training set consists of 60 videos (9,000 frames), and its testing set contains the remaining 40 videos (6,000 frames). We encoded the frames of the 40 testing-set video sequences with the HEVC test model version 16.20 (HM16.20) under the Low Delay P configuration, using QP ∈ {0, 2, 4, 6, 8, 10, 12, 14, 16, 18, 20, 22, 24, 26, 28, 30, 32, 34, 36, 38, 40, 42, 44, 46, 48, 50}, i.e., 26 compression levels. Each “rec.yuv” file is an output distorted video, which can be decomposed into frames with “yuvtobmp.exe” for convenient analysis.

Extraction code: z50m
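The “rec.yuv” files are raw planar video with no header, so splitting them into frames only requires knowing the frame size. The Python sketch below assumes 8-bit YUV 4:2:0 output and that the caller supplies the width and height of the encoded sequence; neither is stated above, and a higher HM output bit depth would need 2 bytes per sample. It is an illustrative alternative to “yuvtobmp.exe”, not a description of that tool. `QPS` reproduces the QP set listed above.

```python
from typing import BinaryIO, Iterator, Tuple

# The 26 QP values used for VCCD-CS: 0, 2, 4, ..., 50.
QPS = list(range(0, 51, 2))

def yuv420_plane_sizes(width: int, height: int) -> Tuple[int, int]:
    """Return (luma_bytes, chroma_bytes_per_plane) for one 8-bit 4:2:0 frame (assumed format)."""
    if width <= 0 or height <= 0 or width % 2 or height % 2:
        raise ValueError("width and height must be positive and even for 4:2:0")
    return width * height, (width // 2) * (height // 2)

def iter_yuv420_frames(stream: BinaryIO, width: int, height: int) -> Iterator[Tuple[bytes, bytes, bytes]]:
    """Yield the (Y, U, V) planes of each frame from a raw 8-bit 4:2:0 stream."""
    luma, chroma = yuv420_plane_sizes(width, height)
    frame_size = luma + 2 * chroma
    while True:
        buf = stream.read(frame_size)
        if not buf:
            return  # clean end of file
        if len(buf) != frame_size:
            raise ValueError("trailing bytes do not form a whole frame; check width/height")
        yield buf[:luma], buf[luma:luma + chroma], buf[luma + chroma:]
```

To use it, open a file with `open("rec.yuv", "rb")` and pass the handle together with the sequence's width and height; each yielded Y plane holds `height` rows of `width` bytes. Reading frame by frame keeps memory use to a single frame rather than the whole sequence.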

Synthesized Video Database Project Page

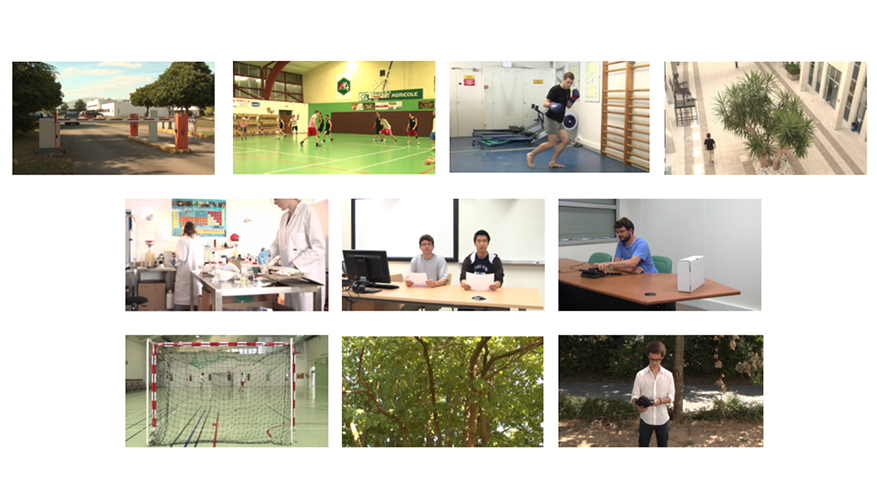

We develop a synthesized video quality database that includes ten different multiview video plus depth (MVD) sequences and 140 synthesized videos with resolutions of 1024×768 and 1920×1088. For each sequence, 14 different texture/depth quantization parameter combinations were used to generate texture/depth view pairs with compression distortion (10 × 14 = 140 synthesized videos). In total, 56 subjects participated in the experiment, and each synthesized sequence was rated by 40 of them using a single-stimulus paradigm with continuous scores. Difference Mean Opinion Scores (DMOS) are provided.
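The section does not state how DMOS is derived from the continuous ratings. A common convention, assumed here, rates a hidden reference alongside each distorted video, takes each subject's difference score (reference rating minus distorted rating), and averages those scores over subjects. Many databases additionally apply per-subject z-score normalization and outlier screening, which this Python sketch omits. The dictionary layout is hypothetical.

```python
from statistics import mean
from typing import Dict

def compute_dmos(scores: Dict[str, Dict[str, float]], ref_of: Dict[str, str]) -> Dict[str, float]:
    """
    scores: subject id -> {video id -> raw continuous score}  (hypothetical layout)
    ref_of: distorted video id -> id of its hidden reference video (assumption)
    Returns distorted video id -> DMOS (higher means more perceived degradation).
    """
    dmos = {}
    for vid, ref in ref_of.items():
        # Difference scores only from subjects who rated both the video and its reference.
        diffs = [s[ref] - s[vid] for s in scores.values() if vid in s and ref in s]
        if not diffs:
            raise ValueError(f"no subject rated both {vid!r} and {ref!r}")
        dmos[vid] = mean(diffs)
    return dmos
```

With 40 raters per synthesized sequence, each DMOS entry in this sketch would be the mean of 40 difference scores.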

Depth Quality Database Project Page

We develop a stereoscopic video depth quality database that includes ten different stereoscopic sequences and 160 distorted stereo videos with a resolution of 1920×1080. The ten sequences are taken from the Nantes-Madrid-3D-Stereoscopic-V1 (NAMA3DS1) database, which contains four categories of impairments: H.264 coding, JPEG2000 coding, down-sampling, and sharpening. However, NAMA3DS1 considers only symmetric distortions. Since both symmetric and asymmetric distortions need to be studied, we generate additional stereoscopic videos with asymmetric distortion. The database contains 90 symmetrically distorted video pairs and 70 asymmetrically distorted video pairs. Thirty subjects (24 male, 6 female) participated in the symmetric distortion experiment, and 24 subjects (19 male, 5 female) participated in the asymmetric distortion experiment.

Picture-level JND Database Project Page

We study the Picture-level Just Noticeable Difference (PJND) of symmetrically and asymmetrically compressed stereoscopic images, where the impairments are JPEG2000 and H.265 intra coding. We conduct interactive subjective quality assessment tests to determine the PJND point, using either a pristine image or a distorted image as the reference. We generate two PJND-based stereo image datasets: the Shenzhen Institute of Advanced Technology-picture-level Just noticeable difference-based Symmetric Stereo Image dataset (SIAT-JSSI) and the Shenzhen Institute of Advanced Technology-picture-level Just noticeable difference-based Asymmetric Stereo Image dataset (SIAT-JASI). Each dataset includes ten source images. Both PJNDPRI and PJNDDRI are provided: PJNDPRI gives the minimum noticeable distortion relative to a pristine reference image, and PJNDDRI gives the minimum noticeable distortion relative to a distorted reference image.
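The section does not describe how the interactive tests search for the PJND point. One plausible procedure, assumed here purely for illustration, is a bisection over an ordered list of distortion levels (for example, increasing QP) that asks at each step whether the test image is noticeably different from the reference. Running it against a pristine reference corresponds to PJNDPRI, and against a distorted reference to PJNDDRI. The sketch assumes the judgement is monotone in the level, which real subjective responses only approximate; `is_noticeable` stands in for a subject's answer and is hypothetical.

```python
from typing import Callable, Optional, Sequence

def find_pjnd(levels: Sequence[int], is_noticeable: Callable[[int], bool]) -> Optional[int]:
    """
    levels: distortion levels ordered from weakest to strongest.
    is_noticeable(level): True if the image at this level is judged noticeably
        different from the reference (hypothetical subject response).
    Returns the weakest noticeable level, or None if no level is noticeable.
    """
    lo, hi = 0, len(levels)  # invariant: the answer index lies in [lo, hi]
    while lo < hi:
        mid = (lo + hi) // 2
        if is_noticeable(levels[mid]):
            hi = mid        # noticeable: the first noticeable level is at or before mid
        else:
            lo = mid + 1    # not noticeable: search stronger distortions
    return levels[lo] if lo < len(levels) else None
```

Compared with stepping through every level, bisection needs roughly log2(N) judgements per search, which shortens each interactive session.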

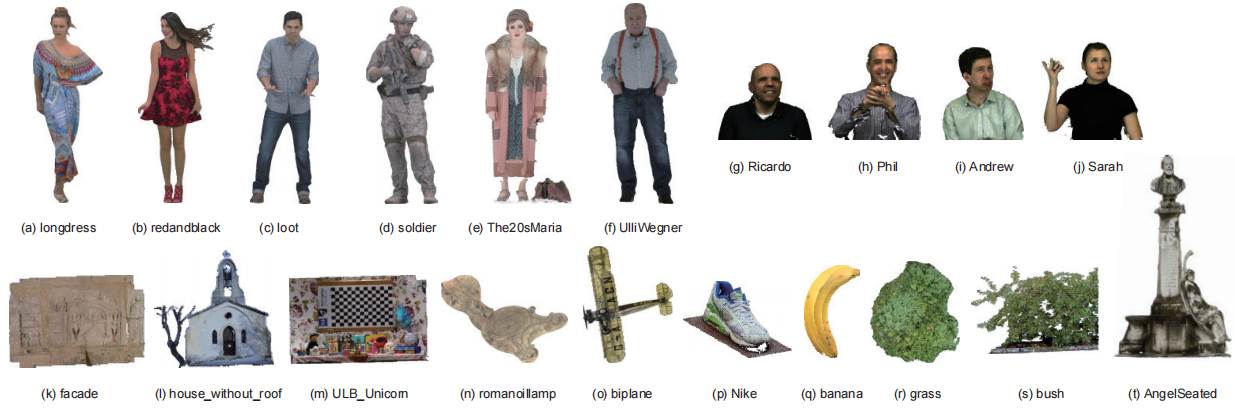

Point Cloud Quality Database Project Page

We focus on subjective and objective Point Cloud Quality Assessment (PCQA) in an immersive environment and study the effects of geometry and texture attributes under compression distortion. Using a Head-Mounted Display (HMD) with six degrees of freedom, we establish a subjective PCQA database named the SIAT Point Cloud Quality Database (SIAT-PCQD). The database consists of 340 distorted point clouds compressed with the MPEG point cloud encoder, obtained by combining 20 sequences with 17 pairs of geometry and texture quantization parameters (20 × 17 = 340).
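Objective PCQA metrics are not specified in this section. To illustrate one widely used geometry measure, the Python sketch below computes a point-to-point (D1-style) error: for every point in one cloud, the squared distance to its nearest neighbour in the other cloud is averaged, and the symmetric value takes the larger of the two directions. It uses brute-force nearest-neighbour search, which is only practical for small clouds; a real evaluation would use a k-d tree or the MPEG reference metric software, and texture (attribute) distortion is not covered.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float, float]

def _sq_dist(p: Point, q: Point) -> float:
    """Squared Euclidean distance between two 3D points."""
    return (p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2 + (p[2] - q[2]) ** 2

def one_way_p2p_mse(src: Sequence[Point], dst: Sequence[Point]) -> float:
    """Mean squared distance from each point in src to its nearest neighbour in dst (O(N*M))."""
    if not src or not dst:
        raise ValueError("point clouds must be non-empty")
    return sum(min(_sq_dist(p, q) for q in dst) for p in src) / len(src)

def symmetric_p2p_mse(ref: Sequence[Point], dist: Sequence[Point]) -> float:
    """Symmetric point-to-point error: the worse of the two one-way errors."""
    return max(one_way_p2p_mse(ref, dist), one_way_p2p_mse(dist, ref))
```

Taking the maximum of both directions penalizes points that compression removed as well as points it added. A geometry PSNR can be derived from this error, but the peak-value convention differs between tools.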